I am currently a Robotics Engineer on the Autonomy team at Bgarage, where I deploy software to drone hardware and run QA simulation testing.

I graduated with a Master's in Mechanical Engineering from the University of Illinois at Urbana-Champaign. I was a graduate research assistant in the Reliable Autonomy Group @ UIUC, focusing on mobile robotics with perception applications. I enjoy full-stack robotics projects that involve both software and hardware.

In my free time I love to build and ride bikes.

News

| Date | Update |
|---|---|
| 2025-02-28 | Paper 'Lyapunov Perception Contracts for Operating Design Domain' accepted to L4DC 2025. |
| 2025-09-12 | Started Robotics Engineer position at Bgarage AI. |
| 2024-04-10 | Video presentation uploaded for ICRA 2024. |
| 2024-01-29 | Paper 'Learning-Based Perception Contracts and Applications' accepted to ICRA 2024. |

Past Projects

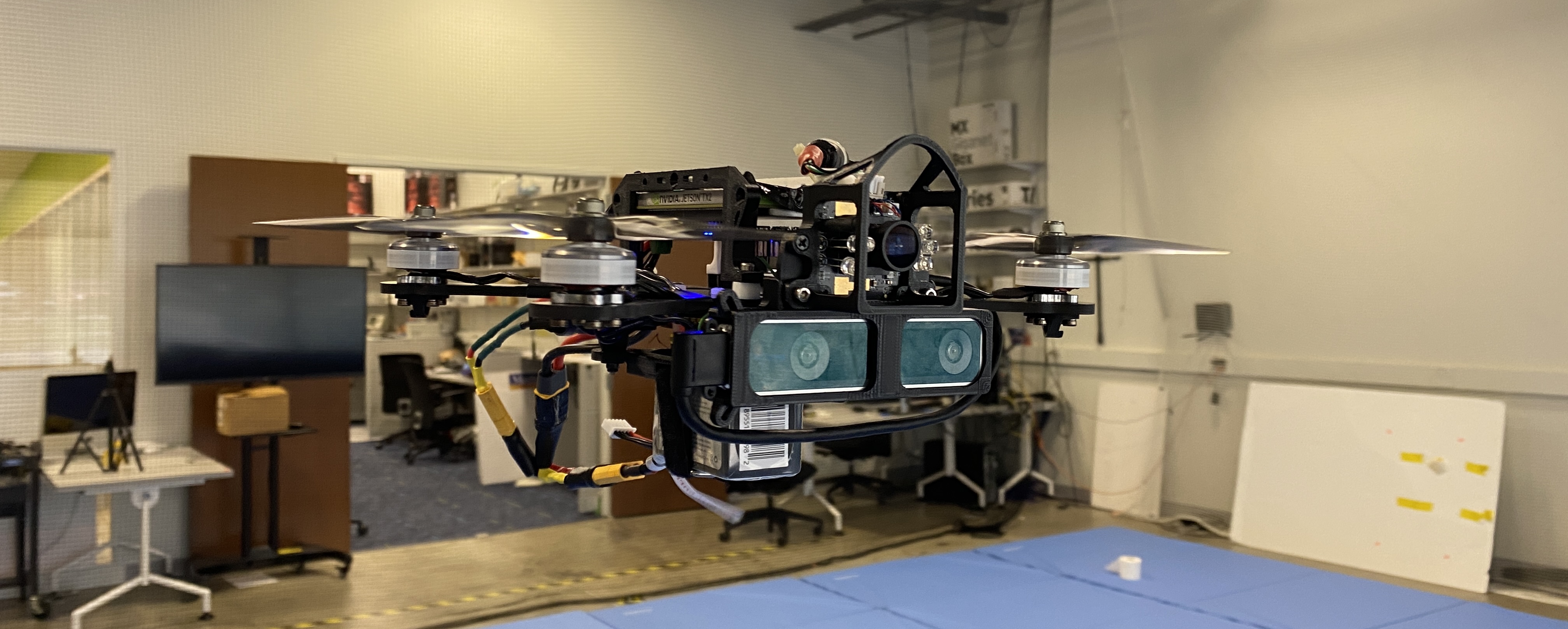

Visual Tracking with Intermittent Visibility

Fall 2024

“Visual Tracking with Intermittent Visibility: Switched Control Design and Implementation”

Yangge Li, Benjamin C Yang, and Sayan Mitra

arXiv link

Designed a Switched Visual Tracker (SVT) for drones that alternates between tracking and recovery modes to maintain target proximity and visibility despite intermittent vision loss. Using switched systems theory, we proved stability and optimized parameters for performance, achieving up to 45% lower tracking error and longer visibility than baseline methods on our custom 5-inch quadcopters.
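
The mode-switching idea can be illustrated with a minimal sketch. This is not the SVT from the paper: the class name `SwitchedTracker`, the `recovery_timeout` parameter, and the rule "switch to recovery after a fixed number of missed frames" are all assumptions made for illustration only.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    TRACKING = "tracking"
    RECOVERY = "recovery"


@dataclass
class SwitchedTracker:
    # Hypothetical parameter: consecutive frames without the target
    # before the controller switches to recovery mode.
    recovery_timeout: int = 3
    missed: int = 0
    mode: Mode = Mode.TRACKING

    def update(self, target_visible: bool) -> Mode:
        """Advance one frame and return the active mode."""
        if target_visible:
            # Target seen again: reset the counter and resume tracking.
            self.missed = 0
            self.mode = Mode.TRACKING
        else:
            self.missed += 1
            if self.missed >= self.recovery_timeout:
                self.mode = Mode.RECOVERY
        return self.mode


tracker = SwitchedTracker()
modes = [tracker.update(v) for v in [True, False, False, False, True]]
```

In a real system each mode would run its own control law; here the sketch only shows the switching logic itself.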

UAV Simulation: Vision-based Closed Loop Navigation

Fall 2023

“Lyapunov Perception Contracts for Operating Design Domain”

Yangge Li, Chenxi Ji, Jai Anchalia, Yixuan Jia, Benjamin C Yang, Daniel Zhuang and Sayan Mitra

L4DC 2025

paper link

This work introduces Lyapunov Perception Contracts (LPC), a framework for specifying and verifying the stability of visual control systems that rely on deep learning for perception and state estimation. LPCs bridge the gap between complex environmental effects (e.g., lighting, weather) and the lack of formal specifications for neural networks. The method synthesizes stability guarantees from data and system models, and identifies safe operating domains for visual controllers. We demonstrated the use of the contract for an automated landing system using both simulated and Google Earth imagery.

UAV Landing Using Perception Contracts

Spring 2023

“Learning-Based Perception Contracts and Applications”

Dawei Sun, Benjamin C. Yang, Sayan Mitra

ICRA 2024

arXiv link

Developed an Inverse Perception Contract (IPC) framework that learns to bound perception errors from data and uses these bounds for safe control. We applied this to a quadcopter vision-based landing pipeline, where the IPC-enabled controller achieved reliable autonomous landings despite perception inaccuracies, outperforming a baseline that failed under the same conditions.
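
As a rough illustration of "bounding perception errors from data and using the bound for safe control", the sketch below fits a single constant error radius from paired ground-truth and estimated positions, then uses it in a conservative landing check. This is a deliberately simplified stand-in, not the IPC method from the paper; the function names, the `margin` inflation factor, and the one-dimensional pad geometry are all assumptions.

```python
def fit_error_bound(true_positions, estimated_positions, margin=1.1):
    """Return a constant bound on |true - estimate| seen in the data.

    `margin` is a hypothetical inflation factor to cover unseen cases.
    """
    errors = [abs(t - e) for t, e in zip(true_positions, estimated_positions)]
    return margin * max(errors)


def safe_to_descend(estimate, bound, pad_half_width):
    """Descend only if every position consistent with the estimate
    (estimate +/- bound) lies over the pad, centered at 0."""
    return abs(estimate) + bound <= pad_half_width


# Toy calibration data: ground truth vs. perception output (meters).
bound = fit_error_bound([0.0, 1.0, 2.0], [0.1, 0.8, 2.05], margin=1.0)
ok_near_center = safe_to_descend(0.1, bound, pad_half_width=0.5)
ok_near_edge = safe_to_descend(0.4, bound, pad_half_width=0.5)
```

The design point is that the controller reasons about the worst case within the learned bound rather than trusting the raw estimate.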

ME446 Project: 6DOF Manipulator Scripting

Spring 2023

Wrote C++ control code for a 6-DOF manipulator, covering task-space PD control with feedforward trajectory tracking, force control, and impedance control.
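
For readers unfamiliar with PD plus feedforward tracking, here is a minimal one-axis sketch in Python (the project itself was in C++). It assumes a unit-mass double integrator as the plant and uses hypothetical gains; it is not the course implementation and ignores the manipulator's Jacobian and dynamics.

```python
def pd_feedforward(x, v, x_des, v_des, a_des, kp=100.0, kd=20.0):
    """Commanded acceleration: feedforward term plus PD feedback on the
    position and velocity tracking errors."""
    return a_des + kp * (x_des - x) + kd * (v_des - v)


def simulate_step_response(x_des=1.0, dt=0.001, duration=5.0):
    """Drive a unit-mass point from rest at 0 to a constant setpoint
    using explicit Euler integration; returns the final position."""
    x, v = 0.0, 0.0
    for _ in range(int(duration / dt)):
        a = pd_feedforward(x, v, x_des, 0.0, 0.0)
        x += v * dt
        v += a * dt
    return x


final_position = simulate_step_response()
```

With `kp=100` and `kd=20` the closed loop is critically damped for a unit mass, so the position settles at the setpoint without overshoot in the continuous-time model.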

ECE484 Project: Carla Simulator

Fall 2022

Developed an aggressive, real-time Python controller for autonomous racing in the CARLA simulator using PD control. Created a custom PRM variant for dynamic obstacle avoidance.

ME461 Project: Segbot

Fall 2024

Implemented a self-balancing Segbot in C on the Texas Instruments LAUNCHXL-F28379D.

Detail Build | GitHub Link